2Sync

CTO & Founder

2Sync is the responsive design tool for Extended Reality:

Our SDK enables immersive, free-roam MR apps that auto-adapt to any physical environment instantly.

By mapping and integrating the real world into the virtual experience, the 2Sync SDK allows true spatial immersion, combining the benefits of free movement, passive haptics, and reduced motion sickness.


Mercedes-Benz Tech Motion GmbH

Software Engineer

Software Engineer in the field of virtual engineering for R&D projects. Working on in-car AR/VR experiences.

University of Otago

Intern

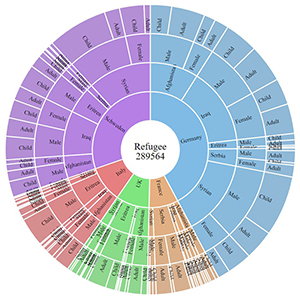

Additional information about live sports games (such as soccer or rugby) is already ubiquitous on TV. Together with the Department of Computer Science at the University of Otago, we are working on an AR project that brings this additional information (player stats, heat maps, the offside line, ...) directly to the user's phone in the stadium.

Hasso Plattner Institute

Master thesis

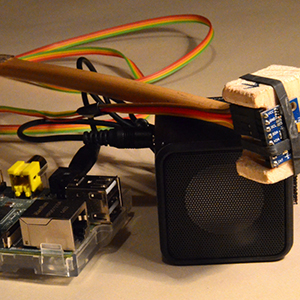

Current passive haptics VR experiences only run with a predetermined set of physical props, which prevents them from running anywhere else. For my master's thesis, I worked with a small team at the Hasso Plattner Institute in Potsdam to develop a software system that allows passive haptics experiences to run on different sets of props, such as physical objects found in the home. My system accomplishes this by letting experience designers define the virtual objects in their experience in a generic format. This format allows the system to run the experience in a wide range of locations by procedurally modelling virtual object sets to match the available props.

You-VR

Software Engineer

During and after my compulsory internship at the small start-up 'You-VR', I worked as a software engineer. We developed a multisensory, location-based multiplayer VR experience in Berlin. At VR NOW CON, our experience was nominated for the interactive VR experience award (see: https://www.vrnowcon.io/awards/).

TU Chemnitz

Student assistant

Implementing visualizations and tools for an autonomous driving project.

Keio-NUS CUTE Center in Singapore

Research assistant

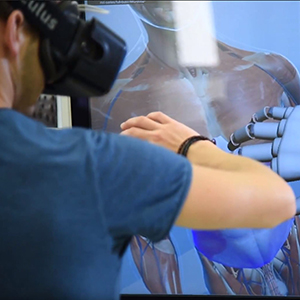

Directly after my bachelor's presentation, I worked as a research assistant at the Keio-NUS CUTE Center in Singapore. I started by supporting their Virtual Interactive Human Anatomy (VIHA) project and was later given my own project, in which I was responsible for creating a Unity3D-based plugin that makes it easy to add hand (Leap Motion) or controller (VIVE) interactions to any project without coding skills. The user could choose from a predefined set of triggers (grab, pinch, recorded gestures, ...) and a predefined set of actions (attach, throw, slice, explode, ...) to enhance their VR environment with interactions.

Bauhaus University in Weimar

Bachelor thesis

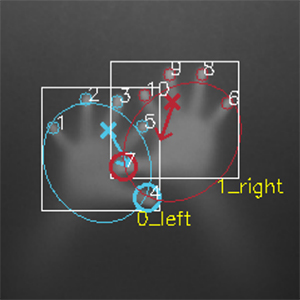

For my bachelor's thesis, I implemented and evaluated a software system that increases the robustness of hand and finger tracking for multitouch tabletops. Compared to similar systems, such as Microsoft's "PixelSense" tabletop and open source projects like Community Core Vision 1.5 and reacTIVision, my system produces more robust results. Additionally, my software system is able to identify hands and assign them to different users.

Bauhaus University in Weimar

Student assistant

Between October 2014 and July 2015, I was a student assistant at the VR Laboratory of the Bauhaus University in Weimar.